The computers have the ability to see images in objects in a way that artists can only dream of replicating. Therefore, to the computer, one of the defining features of a dumbbell is the arm that lifts it.

If you type "dumbbell" into Google, images of these objects are intermingled with pictures of weightlifters holding them. While the computer is clearly incorrect in thinking that dumbbells are sold complete with a human arm, it can be forgiven for thinking that this is the case. You may have noticed that no computer-generated dumbbell is complete without a muscular weightlifting arm. Google's artificial neural network's interpretation of a dumbbell. Take this picture of a dumbbell, for example: Sometimes, the resulting images are not quite what you'd expect. The images in the picture above were created in order to ascertain whether the computer has understood this sort of distinction. But things like size, color and orientation aren't as important. They gave the artificial neural network an object and asked it to create an image of that object. The computer then tries to associate it with specific features. When we want a picture of a fork, the computer should figure out that the defining features of a fork are two to four tines and a handle.

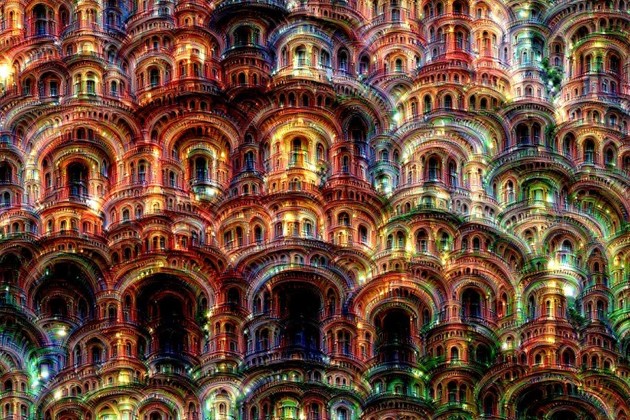

The Google team then realized that they could reverse the process. This is the process behind image-recognition. The neural network then tells us what it has valiantly identified the object to be, sometimes with little success. This process is repeated 10 to 30 times, with each layer identifying key features and isolating them until it has figured out what the image is. This layer then 'talks' to the next layer, which then has a go at processing the image. When the engineers feed the network an image, the first layer of 'neurons' have a look at it. The Google artificial neural network is like a computer brain, inspired by the central nervous system of animals. So, what's the point in creating these bizarre images? Is it purely to find out our future robot overlord's artistic potential, or is there a more scientific reason? As it turns out, it is for science: Google wants to know how effectively computers are learning. Google's artificial neural network creates its own images from keywords. The result of this experiment is some tessellating Escher-esque artwork with Dali-like quirks. This conclusion has been made after testing the ability of Google's servers to recognize and create images of commonplace objects – for example, bananas and measuring cups. and also pig-snails, camel-birds and dog-fish. And it turns out that androids do dream of electric sheep. Software engineers at Google have been analyzing the 'dreams' of their computers.
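The recognition-then-reversal idea described in this article can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's code: the `ToyNetwork` class, the `dream` function, the layer sizes and the step size are all hypothetical, the weights are random rather than trained, there are no bias terms, and there are 3 layers rather than the 10 to 30 the article mentions. Recognition is a forward pass through the stacked layers. "Reversing the process" is implemented here with gradient ascent on the input pixels (often called activation maximization), a common technique that is assumed, not confirmed, to approximate what the engineers did.

```python
import numpy as np


def relu(z):
    """Rectified linear unit: keeps positive values, zeroes the rest."""
    return np.maximum(z, 0.0)


class ToyNetwork:
    """Hypothetical stack of fully connected layers standing in for an image classifier.

    Weights are random here (and there are no biases); a real network would
    learn its weights from labelled images.
    """

    def __init__(self, sizes, seed=0):
        rng = np.random.default_rng(seed)
        # One weight matrix per layer, shaped (outputs, inputs).
        self.weights = [
            rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]

    def forward(self, x):
        """Recognition: each layer processes the previous layer's output in turn."""
        activations = [x]
        last = len(self.weights) - 1
        for i, w in enumerate(self.weights):
            z = w @ activations[-1]
            # Hidden layers use ReLU; the final layer returns raw class scores.
            activations.append(z if i == last else relu(z))
        return activations

    def classify(self, x):
        """Return the index of the highest-scoring class."""
        return int(np.argmax(self.forward(x)[-1]))

    def score_gradient(self, x, target):
        """Backpropagate to get d(score of `target`) / d(input)."""
        activations = self.forward(x)
        grad = np.zeros(self.weights[-1].shape[0])
        grad[target] = 1.0
        for i in reversed(range(len(self.weights))):
            if i != len(self.weights) - 1:
                # ReLU passes gradient only where its output was positive.
                grad = grad * (activations[i + 1] > 0)
            grad = self.weights[i].T @ grad
        return grad


def dream(net, target, x0, steps=200, step_size=0.05):
    """Reverse the process: adjust the input, not the weights, to raise one class score."""
    x = x0.copy()
    for _ in range(steps):
        x = x + step_size * net.score_gradient(x, target)
        # Keep the 'pixels' inside a fixed brightness range.
        x = np.clip(x, -1.0, 1.0)
    return x


if __name__ == "__main__":
    net = ToyNetwork([64, 32, 16, 10])  # 8x8 "image" in, 10 hypothetical classes out
    noise = np.random.default_rng(1).normal(0.0, 0.1, size=64)
    dreamed = dream(net, target=3, x0=noise)
    print("score before:", net.forward(noise)[-1][3])
    print("score after: ", net.forward(dreamed)[-1][3])
```

Because `dream` leaves the weights untouched and only changes the input, whatever the network has associated with a class gets painted into the image. With a trained network whose "dumbbell" examples mostly included arms, this kind of procedure would be expected to paint arms in as well.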
